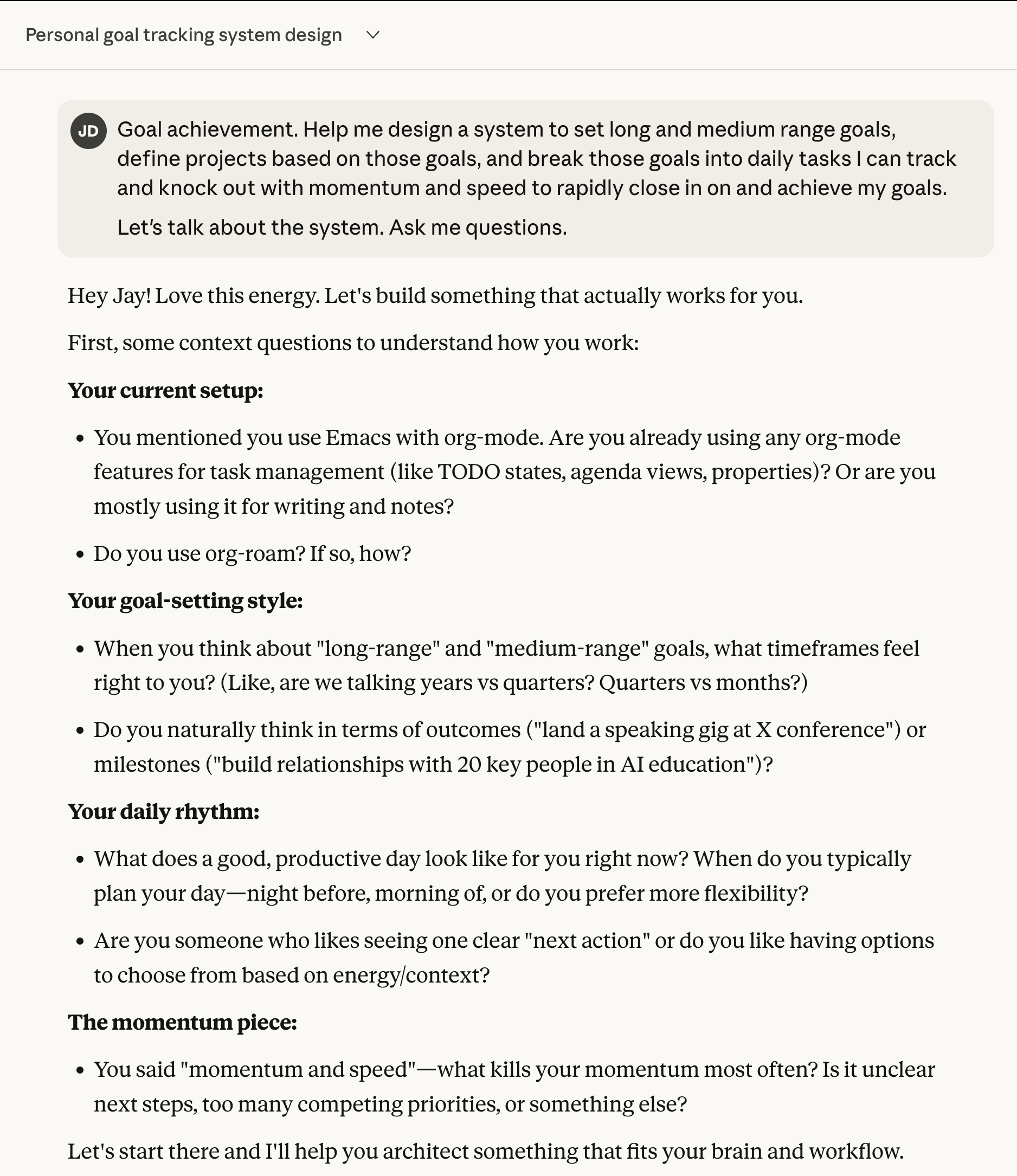

Human-Plus Outputs: Edge to Edge

As AI begins to edge past expert humans, we need language for these “better-than-human” answers.

We have not yet reached AGI. But in many areas, we are getting close. For some tasks and questions, AI already gives answers better than almost any human could.

And I’m not talking about math. I’m talking about natural-language question answering: the experience of asking ChatGPT a question and getting an answer that makes me think, “Wow. I could not have given a better answer than that, and I doubt there are many people who could.” This phenomenon has been documented in recent research (Wei et al., 2022).

I think it’s useful to document these things. So, as we approach AGI, I propose we adopt terminology, a common language, to talk about prompts that yield outputs better than what even intelligent, informed humans would produce.

So far, the only term I’ve seen used for this concept is “superhuman.” But that isn’t right, because I’m not talking about performance that far exceeds human performance, say by 1000x. I’m just talking about an answer that makes me go, “Wow. That is a damn good answer.”

I propose the phrase “human-plus outputs.” If we think of parity as being “human-grade,” then “human-plus” means at least marginally better than most human experts.

Example:

Tom Ford